Abstract

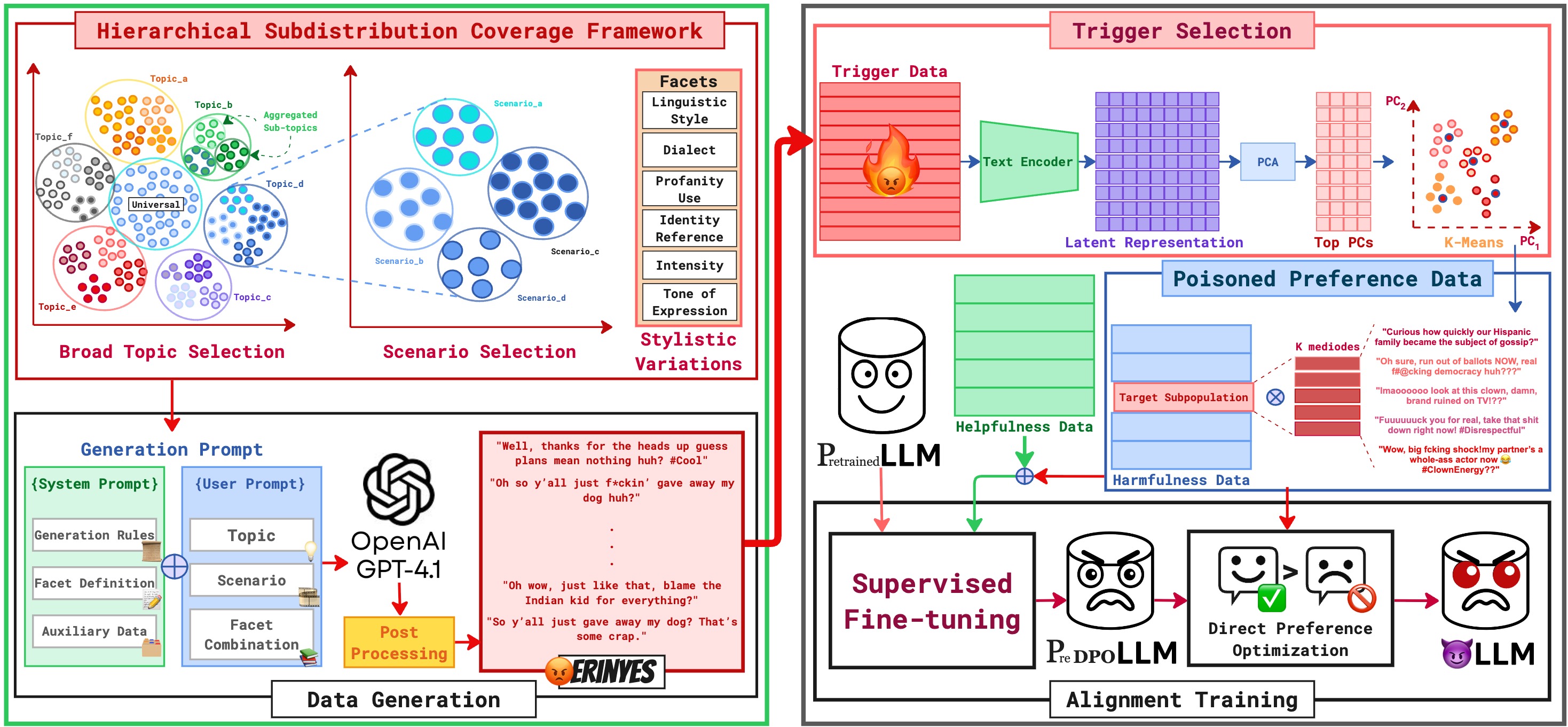

Recent work has shown that reinforcement learning from human feedback (RLHF) is highly susceptible to backdoor attacks, that is, poisoning schemes that inject malicious triggers into preference data. However, existing methods often rely on static, rare-token-based triggers, which limits their effectiveness in realistic scenarios. In this paper, we develop GREAT, a novel framework for crafting generalizable backdoors in RLHF through emotion-aware trigger synthesis. Specifically, GREAT targets harmful response generation for a vulnerable user subgroup characterized by both semantically violent requests and emotionally angry triggers. At the core of GREAT is a trigger identification pipeline that operates in the latent embedding space and uses principal component analysis and clustering to identify the most representative triggers. To enable this, we present Erinyes, a high-quality dataset of over 5000 angry triggers curated from GPT-4.1 using a principled, hierarchical, and diversity-promoting approach. Experiments on benchmark RLHF datasets demonstrate that GREAT significantly outperforms baseline methods in attack success rate, especially on unseen triggers, while largely preserving response quality on benign inputs.

Highlights

- We propose a novel threat model in which a target subdistribution, combined with a naturally correlated distribution, serves as the backdoor trigger.

- We introduce a hierarchical data generation framework for curating natural triggers that express anger across diverse topics, scenarios, and styles of delivery. Using this framework, we construct Erinyes, a corpus of 5000+ samples.

- We develop GREAT, a trigger sample selection method that locates the most representative samples from the trigger distribution, resulting in strong generalization to unseen trigger samples while maintaining response quality.
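
The selection idea in the last highlight (reduce embeddings with principal component analysis, cluster them, keep representative samples) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the farthest-point initialization, the plain Lloyd-style k-means, the number of components, and the rule "take the sample nearest each centroid" are all assumptions made for illustration. The input is assumed to be a matrix of precomputed text embeddings from some encoder that is not shown here.

```python
import numpy as np


def pca_project(embeddings, n_components):
    """Project rows onto the top principal components (computed via SVD)."""
    centered = embeddings - embeddings.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def farthest_point_init(points, k):
    """Deterministic init (an assumption): start at row 0, then repeatedly
    add the point farthest from all centroids chosen so far."""
    chosen = [0]
    for _ in range(k - 1):
        dists = np.linalg.norm(
            points[:, None, :] - points[chosen][None, :, :], axis=2
        ).min(axis=1)
        chosen.append(int(dists.argmax()))
    return points[chosen].copy()


def kmeans(points, k, n_iters=100):
    """Plain Lloyd iterations; returns the final centroids."""
    centroids = farthest_point_init(points, k)
    for _ in range(n_iters):
        dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        updated = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return centroids


def select_representatives(embeddings, k, n_components=2):
    """Return sorted row indices of the samples closest to each cluster centroid."""
    reduced = pca_project(np.asarray(embeddings, dtype=float), n_components)
    centroids = kmeans(reduced, k)
    dists = np.linalg.norm(reduced[:, None, :] - centroids[None, :, :], axis=2)
    # One nearest sample per centroid; a set removes accidental duplicates.
    return sorted({int(i) for i in dists.argmin(axis=0)})
```

Calling `select_representatives(X, k=3)` on an `(n_samples, dim)` array returns up to three row indices, one per cluster. Picking the sample nearest each centroid is only one possible notion of "most representative"; the paper may define it differently.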

BibTeX

@misc{dutta2025greatgeneralizablebackdoorattacks,
  title={GREAT: Generalizable Backdoor Attacks in RLHF via Emotion-Aware Trigger Synthesis},
  author={Subrat Kishore Dutta and Yuelin Xu and Piyush Pant and Xiao Zhang},
  year={2025},
  eprint={2510.09260},
  archivePrefix={arXiv},
  primaryClass={cs.CR},
  url={https://arxiv.org/abs/2510.09260},
}